Tackling Bias in AI Content Moderation: A Guide for Social Media Professionals

Understanding Bias in AI Content Moderation

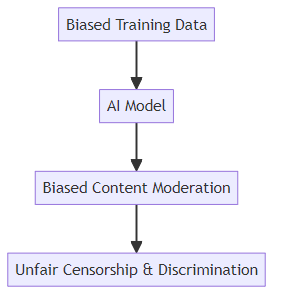

Did you know that AI content moderation can be unintentionally biased? These biases can lead to unfair censorship and discrimination, eroding user trust. (Understanding The Difference Between AI Bias, Censorship ...)

AI models learn from data: If that data reflects existing societal biases, the AI can perpetuate and even amplify them. For example, if a hate speech detection algorithm is trained primarily on examples of hate speech targeting one demographic group, it may be less effective at identifying hate speech targeting other groups.

Bias manifests in various forms: This includes gender, racial, cultural, and political biases. Consider a facial recognition system that misidentifies people of color at higher rates due to a lack of diverse training data.

Unchecked bias leads to unfair outcomes: This can result in censorship, discrimination, and a loss of trust. Imagine a content moderation system that disproportionately removes content related to certain political viewpoints, creating an echo chamber effect.

Biased training data: Datasets used to train AI models may over-represent some demographic groups or viewpoints and under-represent others. For instance, a content moderation tool trained primarily on Western content might struggle to understand cultural nuances in other regions. This risk applies most directly to the learning-based approaches among the six common types of AI content moderation (machine learning, natural language processing, computer vision, and sentiment analysis); rule-based systems and keyword filtering can encode similar blind spots through the rules and word lists people write for them.

Algorithmic bias: The design of the AI algorithm itself can introduce bias, even when the data seems neutral. For example, an algorithm designed to prioritize "engaging" content might amplify sensational or controversial posts regardless of their factual accuracy.

Human annotation bias: The people who label training data may inject their own subjective biases into the process. If annotators consistently flag content from a particular group as "offensive," the AI may learn to do the same, even when the content is not genuinely harmful.
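One rough way to look for annotation bias is to compare how often each annotator flags content from different author groups. The sketch below assumes hypothetical `(annotator_id, author_group, flagged)` records and an arbitrary gap threshold (`max_gap`); a large gap is a prompt for closer review, not proof of bias, because the content itself may differ between groups.

```python
from collections import defaultdict

# Hypothetical annotation records: (annotator_id, author_group, flagged_as_offensive)
ANNOTATIONS = [
    ("ann_1", "group_a", False),
    ("ann_1", "group_a", False),
    ("ann_1", "group_b", True),
    ("ann_1", "group_b", True),
    ("ann_2", "group_a", True),
    ("ann_2", "group_a", False),
    ("ann_2", "group_b", True),
    ("ann_2", "group_b", False),
]


def flag_rates(records):
    """Return {annotator: {author_group: share of items flagged}}."""
    counts = defaultdict(lambda: defaultdict(lambda: [0, 0]))  # [flagged, total]
    for annotator, group, flagged in records:
        cell = counts[annotator][group]
        cell[0] += int(flagged)
        cell[1] += 1
    return {
        ann: {group: hits / total for group, (hits, total) in groups.items()}
        for ann, groups in counts.items()
    }


def annotators_to_review(records, max_gap=0.3):
    """List annotators whose flag rates differ across groups by more than max_gap."""
    suspicious = []
    for ann, rates in flag_rates(records).items():
        if max(rates.values()) - min(rates.values()) > max_gap:
            suspicious.append(ann)
    return suspicious


print(annotators_to_review(ANNOTATIONS))  # ['ann_1']
```

Here `ann_1` flags every post from `group_b` and none from `group_a`, so that annotator's labels would be the first to re-check.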

Common examples of biased outcomes include:

- Facial recognition software misidentifying people of color at higher rates.

- Hate speech detection algorithms flagging innocuous posts from minority communities while missing genuinely hateful content targeting those same groups.

- Content moderation systems disproportionately removing content related to certain political viewpoints.

Understanding how bias creeps into AI systems is the first step in tackling this critical issue. The next section explores how to identify the sources of bias in your own systems; the one after it covers strategies for mitigating them.

Identifying Sources of Bias in Your Content Moderation Systems

AI content moderation systems are not immune to bias, and identifying where that bias comes from is a crucial step toward ensuring fairness. But how do you pinpoint its sources?

First, audit your training data by examining its demographic composition. Look for under-representation or over-representation of certain groups. For example, in healthcare, AI models trained primarily on data from one ethnic group may not perform accurately for other groups.
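A composition audit can start as a simple comparison between each group's share of the training data and its share of a reference population, such as your user base. The sketch assumes every example already carries a group label (often it does not, and inferring one raises its own accuracy and privacy issues); the group names, reference shares, and `tolerance` are hypothetical.

```python
from collections import Counter


def representation_gaps(sample_groups, reference_shares):
    """Dataset share minus reference share for each group (negative = under-represented)."""
    counts = Counter(sample_groups)
    total = sum(counts.values())
    return {
        group: counts.get(group, 0) / total - ref_share
        for group, ref_share in reference_shares.items()
    }


def under_represented(sample_groups, reference_shares, tolerance=0.1):
    """Groups whose dataset share falls short of the reference by more than `tolerance`."""
    gaps = representation_gaps(sample_groups, reference_shares)
    return sorted(group for group, gap in gaps.items() if gap < -tolerance)


# Hypothetical group labels for 100 training examples and a hypothetical user-base breakdown.
training_groups = ["group_a"] * 70 + ["group_b"] * 25 + ["group_c"] * 5
user_base = {"group_a": 0.5, "group_b": 0.3, "group_c": 0.2}

print(under_represented(training_groups, user_base))  # ['group_c']
```

`group_c` makes up 20% of the hypothetical user base but only 5% of the training data, so it is flagged; `group_b` falls short by 5 points, which is within the chosen tolerance.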

Next, analyze the content of the data for potentially biased language, stereotypes, or harmful associations. A content moderation tool trained mainly on Western content could struggle with cultural nuances in other regions.
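One common content check is to see whether examples that merely mention an identity term are labeled harmful far more often than the dataset as a whole. The sketch below assumes hypothetical `(text, labeled_toxic)` pairs and a hypothetical term list; a real term list would be built with input from the communities concerned.

```python
import re

# Hypothetical identity terms to audit.
IDENTITY_TERMS = ["deaf", "gamer"]

# Hypothetical labeled examples: (text, labeled_toxic)
EXAMPLES = [
    ("I am deaf and proud", True),
    ("Deaf culture night at the library", True),
    ("Great match today", False),
    ("You are an idiot", True),
    ("Lovely weather this morning", False),
    ("New gamer setup finally done", False),
]


def toxic_rate_by_term(examples, terms):
    """Compare the overall toxic-label rate with the rate among examples mentioning each term."""
    overall = sum(label for _, label in examples) / len(examples)
    by_term = {}
    for term in terms:
        # Whole-word, case-insensitive match so "Deaf" counts but "deafening" does not.
        pattern = re.compile(rf"\b{re.escape(term)}\b", re.IGNORECASE)
        labels = [label for text, label in examples if pattern.search(text)]
        if labels:  # skip terms that never appear
            by_term[term] = sum(labels) / len(labels)
    return overall, by_term


overall, by_term = toxic_rate_by_term(EXAMPLES, IDENTITY_TERMS)
print(overall, by_term)  # 0.5 {'deaf': 1.0, 'gamer': 0.0}
```

In this toy data every post mentioning "deaf" is labeled toxic, even though none of them is hostile; a model trained on these labels would likely learn to associate the identity itself with harm.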

Where you find gaps, use data augmentation techniques to balance the dataset and address representation gaps. This involves creating new data points by modifying existing ones or synthesizing entirely new data.
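The simplest way to balance group sizes is random oversampling, sketched below. Note that it only duplicates existing examples rather than modifying or synthesizing new ones, so a model can overfit to the few examples it repeats; the record format and `group_of` function are hypothetical.

```python
import random
from collections import defaultdict


def oversample_to_balance(records, group_of, seed=0):
    """Duplicate randomly chosen examples from smaller groups until every group
    matches the size of the largest one (simple random oversampling)."""
    rng = random.Random(seed)  # fixed seed so the result is reproducible
    by_group = defaultdict(list)
    for record in records:
        by_group[group_of(record)].append(record)
    target = max(len(items) for items in by_group.values())
    balanced = []
    for items in by_group.values():
        balanced.extend(items)
        balanced.extend(rng.choices(items, k=target - len(items)))
    return balanced


# Hypothetical records: (text, language)
posts = [("post 1", "en"), ("post 2", "en"), ("post 3", "en"), ("post 4", "en"), ("post 5", "sw")]
balanced = oversample_to_balance(posts, group_of=lambda post: post[1])
print(sorted(lang for _, lang in balanced))
```

The single Swahili post is repeated until both languages have four examples each, which balances the counts but adds no new linguistic variety.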

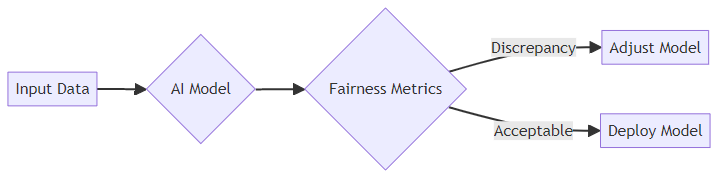

Next, evaluate the model itself. Measure the accuracy, precision, and recall of your AI model separately for each demographic group, and look for discrepancies in these metrics that indicate bias against specific groups.
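Per-group evaluation amounts to splitting the confusion matrix by group before computing the usual metrics. A minimal sketch, assuming binary labels (1 = violating or flagged) and a hypothetical evaluation set that records each author's group:

```python
from collections import defaultdict


def per_group_metrics(y_true, y_pred, groups):
    """Accuracy, precision, and recall for each group (1 = violating / flagged)."""
    cells = defaultdict(lambda: {"tp": 0, "fp": 0, "tn": 0, "fn": 0})
    for truth, pred, group in zip(y_true, y_pred, groups):
        if truth:
            cells[group]["tp" if pred else "fn"] += 1
        else:
            cells[group]["fp" if pred else "tn"] += 1
    results = {}
    for group, c in cells.items():
        total = sum(c.values())
        flagged = c["tp"] + c["fp"]
        violating = c["tp"] + c["fn"]
        results[group] = {
            "accuracy": (c["tp"] + c["tn"]) / total,
            # Precision and recall are undefined when their denominator is zero.
            "precision": c["tp"] / flagged if flagged else None,
            "recall": c["tp"] / violating if violating else None,
        }
    return results


# Hypothetical evaluation set: ground truth, model decisions, and author group.
y_true = [1, 1, 0, 0, 1, 1, 0, 0]
y_pred = [1, 1, 0, 0, 1, 0, 1, 0]
groups = ["group_a"] * 4 + ["group_b"] * 4

for group, metrics in per_group_metrics(y_true, y_pred, groups).items():
    print(group, metrics)
```

In this toy data the model is perfect on `group_a` but scores only 0.5 on all three metrics for `group_b`, a gap that a single overall accuracy figure (0.75) would hide.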

Then, use fairness metrics to quantify the level of bias in your system and track progress toward mitigation. Common fairness metrics include demographic parity (the rate at which content is flagged is similar across groups), equalized odds (true positive and false positive rates are similar across groups), and predictive parity (positive predictive value, the share of flagged items that actually violate policy, is similar across groups). Tracking these metrics helps reveal whether the AI model is treating any particular group unfairly.
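The three metrics can each be summarized as the largest difference between groups. The sketch below is one possible implementation under that convention (for equalized odds it reports the worse of the true positive rate gap and the false positive rate gap); toolkits such as Fairlearn offer tested versions, and the data here is hypothetical.

```python
import math


def _ratio(num, den):
    return num / den if den else math.nan  # undefined rates become NaN


def group_rates(y_true, y_pred, groups):
    """Flag rate, TPR, FPR, and PPV per group (1 = violating / flagged)."""
    rates = {}
    for group in sorted(set(groups)):
        pairs = [(t, p) for t, p, g in zip(y_true, y_pred, groups) if g == group]
        tp = sum(1 for t, p in pairs if t and p)
        fp = sum(1 for t, p in pairs if not t and p)
        fn = sum(1 for t, p in pairs if t and not p)
        tn = sum(1 for t, p in pairs if not t and not p)
        rates[group] = {
            "flag_rate": _ratio(tp + fp, len(pairs)),
            "tpr": _ratio(tp, tp + fn),
            "fpr": _ratio(fp, fp + tn),
            "ppv": _ratio(tp, tp + fp),
        }
    return rates


def _gap(rates, key):
    values = [r[key] for r in rates.values() if not math.isnan(r[key])]
    return max(values) - min(values) if values else math.nan


def fairness_gaps(y_true, y_pred, groups):
    """Largest between-group difference for each metric (0 = perfectly equal)."""
    rates = group_rates(y_true, y_pred, groups)
    odds_gaps = [g for g in (_gap(rates, "tpr"), _gap(rates, "fpr")) if not math.isnan(g)]
    return {
        "demographic_parity": _gap(rates, "flag_rate"),
        "equalized_odds": max(odds_gaps) if odds_gaps else math.nan,
        "predictive_parity": _gap(rates, "ppv"),
    }


y_true = [1, 1, 0, 0, 1, 1, 0, 0]
y_pred = [1, 1, 0, 0, 1, 0, 1, 0]
groups = ["group_a"] * 4 + ["group_b"] * 4
print(fairness_gaps(y_true, y_pred, groups))
# {'demographic_parity': 0.0, 'equalized_odds': 0.5, 'predictive_parity': 0.5}
```

Both groups have half their posts flagged, so demographic parity looks perfect, yet `group_b` suffers far more errors. This is why it helps to track several metrics rather than one.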

It's also important to solicit feedback from users representing a wide range of backgrounds and perspectives on the fairness and accuracy of your content moderation system. This can provide valuable insights into how the system is perceived by different groups.

Then, conduct user testing to observe how different groups interact with the system and identify potential pain points related to bias. This hands-on approach can reveal issues that might not be apparent from metrics alone.

Finally, establish a clear process for users to report biased outcomes and ensure that these reports are taken seriously and investigated thoroughly. This feedback loop is essential for continuous improvement and bias mitigation.

By systematically auditing your training data, evaluating algorithm performance, and gathering diverse user feedback, you can take meaningful steps towards identifying and addressing sources of bias in your content moderation systems.

Next, we will explore strategies for mitigating bias in AI content moderation.

Strategies for Mitigating Bias in AI Content Moderation

Is your AI content moderation system unintentionally silencing certain voices? By implementing proactive strategies, you can create a fairer environment for all users.

Mitigating bias in ai content moderation requires a multi-faceted approach. It's not enough to simply identify bias; you must actively work to reduce its impact. Here are some key strategies to consider:

One of the most effective ways to mitigate bias is by ensuring your training data is diverse and representative. This means actively seeking out and incorporating data sources that accurately reflect the demographics and perspectives of your user base.

- Incorporate diverse data sources: Add data that improves the representation of underrepresented groups. For example, if your content moderation system struggles to detect hate speech in languages other than English, incorporate more training data in those languages.

- Use data augmentation techniques: Techniques such as synthetic data generation can balance the dataset and address representation gaps.

- Be mindful of potential biases in data augmentation methods: Ensure that they do not introduce new forms of unfairness.
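One way to act on the last point is to compare each group's label distribution before and after augmentation: if the synthetic examples generated for a group are mostly "violating," the augmented data teaches the model to associate that group with violations. The records and `tolerance` below are hypothetical.

```python
from collections import defaultdict


def positive_rate_by_group(records):
    """Share of records labeled positive (e.g., 'violating') in each group."""
    counts = defaultdict(lambda: [0, 0])  # [positives, total]
    for group, label in records:
        counts[group][0] += int(label)
        counts[group][1] += 1
    return {group: pos / total for group, (pos, total) in counts.items()}


def augmentation_drift(before, after, tolerance=0.05):
    """Groups whose positive-label rate moved by more than `tolerance` after augmentation."""
    old = positive_rate_by_group(before)
    new = positive_rate_by_group(after)
    return {
        group: new[group] - old[group]
        for group in sorted(old.keys() & new.keys())
        if abs(new[group] - old[group]) > tolerance
    }


# Hypothetical (group, is_violating) records before and after adding synthetic examples.
original = [("group_a", True), ("group_a", False), ("group_b", True), ("group_b", False)]
augmented = original + [("group_b", True), ("group_b", True)]
print(augmentation_drift(original, augmented))  # {'group_b': 0.25}
```

The two synthetic `group_b` examples are both violating, pushing that group's violation rate from 50% to 75%, which is the kind of new skew worth catching before training.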

In addition to data diversity, algorithmic fairness techniques can help reduce bias in your AI models. These techniques involve modifying the algorithm itself to promote fairer outcomes.

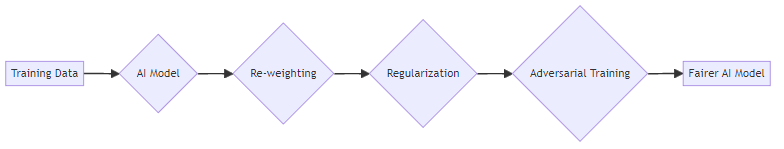

- Employ algorithmic fairness techniques: Techniques such as re-weighting, regularization, and adversarial training can reduce bias in your AI model.

- Re-weighting: Adjusts the importance of different data points to ensure balanced representation and outcomes.

- Regularization: Adds a penalty term to the model's training objective that discourages unfair behavior, such as large differences in error rates between groups, so the model is less likely to fit biased patterns in the data.

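- Adversarial training: Trains a second model (the adversary) to predict a protected attribute, such as a user's demographic group, from the primary model's outputs; the primary model is penalized whenever the adversary succeeds, pushing it toward predictions that reveal less about group membership.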

- Weigh the trade-offs: Consider the trade-offs between fairness and accuracy when selecting and implementing these techniques.

- Evaluate the impact regularly: Measure how each fairness intervention affects both the overall performance and the fairness of your system.
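Re-weighting can be made concrete with the "reweighing" pre-processing method of Kamiran and Calders, which gives each example the weight P(group) × P(label) / P(group, label). A minimal sketch with hypothetical data; the resulting weights could then be passed to a training routine that accepts per-example weights, such as the `sample_weight` argument many scikit-learn estimators take in `fit`.

```python
from collections import Counter


def reweighing_weights(groups, labels):
    """Per-example weight w = P(group) * P(label) / P(group, label).

    When every (group, label) combination appears in the data, the weighted
    data has the same positive-label rate in every group.
    """
    n = len(labels)
    group_counts = Counter(groups)
    label_counts = Counter(labels)
    joint_counts = Counter(zip(groups, labels))
    return [
        (group_counts[g] / n) * (label_counts[y] / n) / (joint_counts[(g, y)] / n)
        for g, y in zip(groups, labels)
    ]


def weighted_positive_rate(groups, labels, weights, group):
    """Share of a group's total weight carried by positive (violating) labels."""
    pos = sum(w for g, y, w in zip(groups, labels, weights) if g == group and y == 1)
    total = sum(w for g, w in zip(groups, weights) if g == group)
    return pos / total


# Hypothetical training set: 75% of group_a's posts are labeled violating vs. 25% of group_b's.
groups = ["group_a"] * 4 + ["group_b"] * 4
labels = [1, 1, 1, 0, 1, 0, 0, 0]
weights = reweighing_weights(groups, labels)
print([round(w, 3) for w in weights])  # [0.667, 0.667, 0.667, 2.0, 2.0, 0.667, 0.667, 0.667]
print(round(weighted_positive_rate(groups, labels, weights, "group_a"), 3))  # 0.5
```

Rare combinations (non-violating `group_a` posts, violating `group_b` posts) are up-weighted and common ones down-weighted, so after weighting both groups have a 50% violation rate and the model has less incentive to use group membership as a shortcut.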

Finally, human oversight and explainability are crucial for ensuring fairness in ai content moderation. Even the most sophisticated ai systems can make mistakes, and human moderators are needed to review and correct potentially biased decisions.

- Implement human-in-the-loop moderation processes: Have human moderators review and correct potentially biased decisions made by the AI system.

- Use explainable AI (XAI) techniques: These help you understand the reasoning behind the AI's decisions and identify potential sources of bias.

- Provide clear explanations to users: Tell users why their content was flagged or removed, fostering transparency and building trust.
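A common human-in-the-loop pattern is to let the system act automatically only when its classifier is confident and to send everything in between to a person, attaching a plain-language reason either way. The sketch assumes a hypothetical classifier score between 0 and 1 and illustrative thresholds (`remove_at`, `keep_below`) that would need tuning and per-group auditing; the `reason` string is a user-facing message, not an XAI technique in itself.

```python
from dataclasses import dataclass


@dataclass
class Decision:
    action: str  # "remove", "keep", or "human_review"
    reason: str  # plain-language explanation for the user or reviewer


def route(score, policy, remove_at=0.9, keep_below=0.3):
    """Act automatically only when the classifier is confident; otherwise escalate.

    `score` is a hypothetical probability that the post violates `policy`.
    """
    if score >= remove_at:
        return Decision("remove", f"Removed: likely violates the {policy} policy.")
    if score < keep_below:
        return Decision("keep", "No policy violation detected.")
    return Decision("human_review", f"Sent to a human moderator to check against the {policy} policy.")


for s in (0.95, 0.6, 0.1):
    print(s, route(s, "hate speech").action)
```

Widening the band between the two thresholds sends more posts to human review, trading moderator workload for fewer unreviewed automated mistakes.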

By implementing these strategies, you can take meaningful steps toward mitigating bias in your AI content moderation systems and creating a fairer online environment for all.

Building Ethical Frameworks and Guidelines

It's easy to assume AI is unbiased, but without a solid ethical foundation, AI content moderation can perpetuate societal inequities. So how can social media professionals ensure fairness in AI?

A code of ethics provides a framework for responsible AI development and deployment. It guides decisions, ensures accountability, and promotes user trust.

- Establish clear ethical principles to guide AI content moderation systems. These principles should prioritize fairness, transparency, and respect for user rights. For example, an AI system should not disproportionately censor content from marginalized communities or unfairly favor certain viewpoints.

- Consult with ethicists, legal experts, and community stakeholders. Diverse perspectives ensure the code of ethics reflects a broad range of values and concerns. This collaboration helps identify potential biases and unintended consequences early in the development process. Examples of community stakeholders include user groups, advocacy organizations, academic researchers, and subject matter experts.

- Regularly review and update the code of ethics to adapt to evolving societal norms and technological advancements. This ensures that the guidelines remain relevant and effective over time.

Transparency and accountability are crucial for building trust in AI content moderation systems. Users need to understand how decisions are made and have recourse if they believe those decisions are unfair.

- Publicly disclose content moderation policies and processes, including how AI is used to enforce these policies. For example, explain the types of content that are prohibited and the criteria used to flag or remove content.

- Establish clear channels for users to appeal content moderation decisions. Provide timely responses to inquiries. This ensures users have a voice and can challenge decisions they believe are unjust.

- Regularly audit AI systems for bias. Publish audit results to demonstrate transparency and accountability. These audits should assess the accuracy and fairness of the system across different demographic groups.
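One statistic a published audit might include is the share of automated removals overturned on appeal, broken down by group: a markedly higher overturn rate for one group suggests the system over-removes that group's content. The sketch assumes a hypothetical appeal log that records the author's group; it only sees users who chose to appeal, and `min_appeals` suppresses rates for very small groups.

```python
from collections import defaultdict


def overturn_summary(appeals, min_appeals=20):
    """Per-group appeal counts and the share of removals overturned on appeal.

    Groups with fewer than `min_appeals` appeals are omitted to avoid
    publishing noisy rates for very small groups.
    """
    totals = defaultdict(int)
    overturned = defaultdict(int)
    for group, was_overturned in appeals:
        totals[group] += 1
        overturned[group] += int(was_overturned)
    return {
        group: {"appeals": totals[group], "overturn_rate": overturned[group] / totals[group]}
        for group in sorted(totals)
        if totals[group] >= min_appeals
    }


# Hypothetical appeal log: (author_group, decision_overturned)
appeal_log = [("group_a", False), ("group_a", True), ("group_b", True), ("group_b", True), ("group_c", True)]
print(overturn_summary(appeal_log, min_appeals=2))
```

With `min_appeals=2`, `group_c` is left out of the summary, and `group_b`'s 100% overturn rate stands out against `group_a`'s 50%.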

Addressing bias in AI content moderation requires a collective effort. Sharing knowledge and best practices helps organizations learn from each other and develop more effective solutions.

- Participate in industry-wide discussions and collaborations to share best practices for mitigating bias in AI content moderation. This includes sharing insights, challenges, and successful strategies with peers.

- Support research and development efforts aimed at creating fairer AI systems. This can involve funding research projects, contributing data, or participating in collaborative research initiatives.

- Contribute to open-source projects. Share tools and resources to help others address bias in their content moderation systems. Open-source contributions promote transparency, collaboration, and innovation in the field.

By building ethical frameworks, promoting transparency, and fostering collaboration, social media professionals can take meaningful steps toward creating fairer and more equitable AI content moderation systems.

Leveraging AI for Good: Proactive Bias Detection with Social9

Is your social media content unintentionally reinforcing bias? Social9 can help you create engaging, inclusive content that resonates with a diverse audience.

Social9's AI-Powered Generation tool simplifies the process of creating engaging social media content. It generates posts, captions, and hashtags that capture attention and drive interaction, saving you time and effort so you can focus on strategy and community building.

Our AI is trained on diverse datasets to minimize bias and promote inclusivity in your content, helping your message resonate with a wide range of audiences.

Social9's Smart Captions and Hashtag Suggestions features are designed to optimize your content for maximum impact. These tools use AI to help you craft compelling text and reach a broader audience.

Utilize Social9's Smart Captions feature to enhance your posts with compelling and relevant text. This helps you create captions that capture attention and convey your message effectively.

Our Hashtag Suggestions tool helps your content reach a wider audience by recommending trending and niche-specific hashtags, increasing your visibility and engagement.

Benefit from AI-driven insights that help you optimize your content for maximum impact, so your posts reach the right people at the right time.

Social9 also provides a comprehensive suite of tools and support to help you create effective social media content, from content templates to 24/7 support.

Make use of Social9's Content Templates to create visually appealing and consistent posts. This helps you maintain a cohesive brand image and attract followers.

Our 24/7 Support ensures that you always have assistance when you need it. Whether you have a question or need help troubleshooting, our team is here to assist.

Taken together, these features make Social9 an all-in-one solution for creating engaging and effective social media content, so you can manage your social media presence and achieve your goals.

With Social9, you can proactively detect and mitigate bias in your social media content, ensuring that your message is inclusive and resonates with a diverse audience.

The Future of AI Content Moderation: Towards a More Equitable Online Experience

AI content moderation is constantly evolving. Which trends are shaping the future of online experiences, and how can social media professionals prepare?

Algorithmic fairness research continues to advance, seeking innovative ways to reduce bias in AI models. These advancements aim to create systems that are more equitable and transparent in their decision-making.

Federated learning is gaining traction. This privacy-preserving technique trains AI models across many devices or organizations without centralizing their raw data, making it easier to learn from diverse data while protecting user privacy.
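The core of the widely used federated averaging (FedAvg) approach is the server-side step sketched below: each client trains on its own data and sends back only model parameters, which the server averages, weighting each client by how many examples it trained on. This is a minimal sketch with hypothetical plain-list parameters; local training, secure aggregation, and other protections used in real deployments are omitted.

```python
def federated_average(client_updates):
    """Federated averaging (FedAvg) aggregation step.

    `client_updates` is a list of (parameters, num_local_examples) pairs, where
    each parameter list was trained on one client's own data. Only these
    parameters reach the server; the raw posts stay on the clients.
    """
    total = sum(n for _, n in client_updates)
    size = len(client_updates[0][0])
    averaged = [0.0] * size
    for params, n in client_updates:
        for i, value in enumerate(params):
            averaged[i] += value * n / total  # weight each client by its data size
    return averaged


# Hypothetical updates from two clients holding 1 and 3 local examples.
print(federated_average([([1.0, 2.0], 1), ([3.0, 4.0], 3)]))  # [2.5, 3.5]
```

The client with three examples pulls the average three times as strongly as the client with one, so communities with more local data still shape the shared model without their posts ever leaving their devices.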

Interpretability and explainability are receiving more emphasis, with the goal of building trust and transparency in AI systems by making their decision-making processes more understandable.

Governments and regulatory bodies are playing a more active role. They are setting standards and guidelines for ai content moderation to ensure fairness and accountability.

Legislation is being developed to promote fairness, transparency, and accountability in AI systems and to protect users from biased outcomes.

International cooperation is vital to address bias in AI on a global scale. It helps ensure that AI systems adhere to ethical principles and do not perpetuate discrimination.

Users are gaining more control over their content moderation experience. This includes the ability to customize filters and report biased outcomes.

Fostering a culture of inclusivity and respect within online communities is crucial. This helps create a safe and welcoming environment for all users.

Promoting media literacy and critical thinking skills is essential. This helps users identify and challenge biased content.

By staying informed about these emerging trends, social media professionals can contribute to a more equitable online experience for everyone.

Conclusion

Bias in AI content moderation poses an ongoing challenge. Mitigating it requires constant vigilance and a multifaceted strategy.

- Acknowledge the complexity: Recognize that bias stems from various sources, including data, algorithms, and human oversight.

- Prioritize ethics: Use ethical frameworks to guide AI development and deployment.

- Foster collaboration: Encourage knowledge sharing to develop effective solutions.

By embracing ethics, prioritizing inclusion, and fostering collaboration, we can create equitable online experiences.