Explainable AI (XAI) for Content Creation: Demystifying the Black Box

The Rise of AI in Content Creation

AI is reshaping how businesses create content. The question is no longer whether AI will affect content, but how content creators can harness it to improve their content strategy.

AI tools are rapidly changing how content is produced. From generating text and images to editing video, AI gives content creators new capabilities.

- Speed and Efficiency: AI writing assistants can quickly draft blog posts or social media captions, and automated video editors can produce compelling content in less time, freeing creators for strategic tasks.

- Scalability: AI enables creators to produce large volumes of content without sacrificing quality. This is especially useful for businesses that need a consistent presence across multiple platforms.

- Personalization: AI algorithms can analyze user data to tailor content to specific audiences, which can lead to higher engagement and better conversion rates.

For example, AI-driven image generators let marketers create visuals for ad campaigns in minutes, which boosts productivity and frees content creators to focus on strategy and audience engagement.

Despite these benefits, AI in content creation also introduces challenges. A major one is the lack of transparency in how AI algorithms make decisions.

- Understanding AI Decisions: Content creators often struggle to understand how AI algorithms arrive at their outputs. This "black box" nature makes it difficult to assess the quality and accuracy of the content.

- Bias Concerns: Without transparency, it's hard to identify and correct biases in AI-generated content, which can lead to unfair or discriminatory content that damages a brand's reputation.

- Ethical Considerations: The lack of transparency raises questions about accountability and responsibility for AI-generated content. If an AI produces harmful or misleading content, who is to blame?

Transparency, together with authenticity and accountability, is essential for building trust, avoiding bias, and meeting ethical guidelines.

- Building Trust: Audiences value authenticity and transparency. Content creators need to understand how AI is used in their content to maintain credibility.

- Avoiding Biases: Transparency supports fairness and inclusivity, because understanding how the AI makes decisions helps prevent biased content.

- Meeting Compliance: Ethical AI guidelines are becoming increasingly important, and transparency helps content creators adhere to these standards.

As AI continues to evolve, addressing these transparency concerns will be crucial for responsible and effective content creation. The next section takes a closer look at explainable AI (XAI) and how it addresses these challenges.

What is Explainable AI (XAI)?

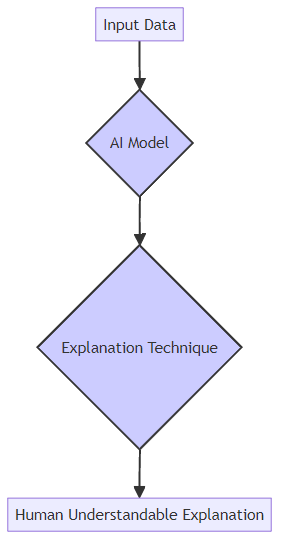

Explainable AI (XAI) is about making AI understandable. Think of it as opening the "black box" to see how an AI system arrives at its decisions.

Explainability, interpretability, and transparency are often used interchangeably, but they have distinct meanings, and knowing the difference helps content creators.

- Explainability focuses on clarifying an AI system's internal functions, making it easier to grasp how the system works in general. For example, knowing that a language model uses a transformer architecture to process text is a form of explainability. According to IBM, explainability is crucial for building trust in AI models.

- Interpretability centers on the reasons behind a specific decision: why the AI made a particular choice. For instance, if an AI suggests a headline, interpretability would tell you which words or phrases in your original draft led to that suggestion. This matters for content creators who need to justify their choices.

- Transparency refers to understanding how inputs are processed into outputs, that is, seeing the flow of information through the model like a clear pipeline. Knowing that customer demographics are fed into a personalization algorithm to generate tailored emails is an example of transparency.

XAI aims to achieve several key goals, each contributing to more effective and trustworthy AI systems.

- Justification: XAI strengthens trust in AI-driven content decisions. By understanding the rationale behind AI suggestions, content creators can confidently support their choices: "The AI recommended this because it analyzed factors X, Y, and Z."

- Control: XAI helps identify vulnerabilities and flaws in AI models, which allows for better control and debugging. If an AI makes odd suggestions, XAI can help you figure out why and fix it.

- Improvement: XAI pinpoints areas for continuous model refinement. By analyzing the AI's decision-making process, developers can improve the model's accuracy and reliability, which means better AI tools for you over time.

- Discovery: XAI can generate new ideas and hypotheses for content strategy. AI insights may reveal a connection you would never have spotted yourself and spark new approaches to content creation.

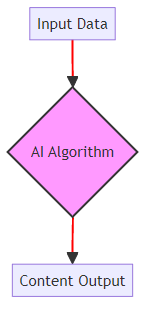

Traditional AI often operates as a "black box," producing results without clear explanations. XAI, in contrast, seeks to provide transparency and understanding at every step.

- Traditional AI uses machine learning algorithms to arrive at results, but even the people who build the system may not fully understand how the algorithm reached a particular decision.

- XAI implements specific techniques so that each decision made during the machine learning process can be traced and explained.

- Benefits of XAI include accuracy, control, accountability, and auditability, leading to more reliable and trustworthy AI systems.

Understanding XAI is the first step toward harnessing its power. The next section covers the techniques and methods that turn AI into XAI.

XAI Techniques for Content Creation

XAI techniques are transforming content creation, but understanding the specific methods is crucial. Let's explore how these techniques break down an AI's decision-making process, making it more transparent and controllable.

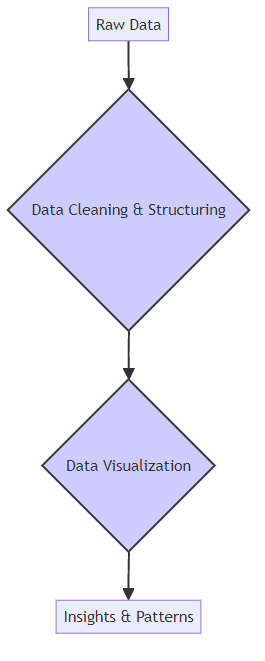

Pre-modeling explainability sets the stage for effective AI by focusing on data preparation. It involves:

- Understanding data structure, audience characteristics, and trends: This first step ensures content creators know their raw material. For example, a retail company might analyze customer purchase history to find peak shopping times and popular product categories, which tells it what kind of content is likely to land.

- Using data visualization to identify patterns and insights: Visual tools like charts and graphs reveal hidden trends. A healthcare provider might visualize patient demographics and treatment outcomes to guide content for targeted health campaigns. Seeing the data visually makes it much easier to grasp.

- Transforming data into a usable format for AI models: Raw data is often messy and needs cleaning and structuring. A financial firm, for instance, might transform raw market data into time series to train AI models that predict stock prices. You can't feed arbitrary data to an AI and expect good results.
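As a rough illustration of these pre-modeling steps, the Python sketch below cleans a small, made-up purchase log, drops a row it cannot parse, and counts purchases by hour and category. The data, field layout, and function names (`clean_rows`, `summarize`) are hypothetical, and the text bar chart merely stands in for a real charting tool.

```python
from collections import Counter
from datetime import datetime

# Hypothetical raw export: inconsistent spacing and casing, plus one corrupt row.
RAW_ROWS = [
    "2024-03-01 18:05, Shoes ",
    "2024-03-01 18:40,shoes",
    "2024-03-02 09:15, Outerwear",
    "not-a-date, Shoes",
    "2024-03-02 18:55, Accessories",
]


def clean_rows(raw_rows):
    """Turn 'timestamp, category' strings into (datetime, category) tuples.

    Whitespace and casing are normalized; rows that cannot be parsed are
    dropped rather than guessed at.
    """
    cleaned = []
    for row in raw_rows:
        parts = row.split(",")
        if len(parts) != 2:
            continue
        stamp, category = parts[0].strip(), parts[1].strip().lower()
        try:
            when = datetime.strptime(stamp, "%Y-%m-%d %H:%M")
        except ValueError:
            continue  # unparseable timestamp: drop the row
        cleaned.append((when, category))
    return cleaned


def summarize(rows):
    """Count purchases per hour of day and per product category."""
    by_hour = Counter(when.hour for when, _ in rows)
    by_category = Counter(category for _, category in rows)
    return by_hour, by_category


rows = clean_rows(RAW_ROWS)
by_hour, by_category = summarize(rows)

# A plain-text bar chart as a stand-in for a real visualization library.
for hour in sorted(by_hour):
    print(f"{hour:02d}:00 {'#' * by_hour[hour]}")
print("Top categories:", by_category.most_common(2))
```

Dropping the unparseable row, rather than inventing a value for it, keeps the cleaning step itself easy to explain.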

In-modeling explainability relies on models that are designed to be understandable from the start, making the AI's logic clear.

- Developing models with inherent understandability: These models prioritize transparency over complexity. A social media platform might use a rule-based system with simple rules to flag inappropriate content, so each moderation decision can be traced to the exact rule that triggered it.

- Using decision trees to clarify the prediction process: Decision trees map out decision paths visually. An e-commerce platform might use a decision tree to decide which product recommendations to show based on customer browsing history. It's like a flowchart of the AI's thinking.

- Combining complex black-box models with interpretable models: This approach balances accuracy with transparency. A simpler, more transparent model (often called a surrogate) can be trained to mimic a more complex one and explain its outcomes. It's like having a translator for the complicated AI.
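A minimal sketch of the first two ideas, assuming plain Python and entirely hypothetical rules, features, and tree: each moderation flag reports the named rule that fired, and the recommendation tree returns the path of decisions it took along with its answer.

```python
import re


# Hypothetical moderation rules: each readable name maps to a simple check,
# so every flag can be traced back to the rule that fired.
def too_many_links(text):
    return len(re.findall(r"https?://\S+", text)) >= 3


def shouting(text):
    return re.search(r"\b[A-Z]{6,}\b", text) is not None


def banned_phrase(text):
    return "buy followers" in text.lower()


RULES = {
    "too_many_links": too_many_links,
    "shouting": shouting,
    "banned_phrase": banned_phrase,
}


def moderate(text):
    """Return (flagged, names of the rules that fired)."""
    fired = [name for name, rule in RULES.items() if rule(text)]
    return bool(fired), fired


# Hypothetical hand-built decision tree for product recommendations.
TREE = {
    "feature": "viewed_running_shoes",
    "yes": {
        "feature": "abandoned_cart",
        "yes": {"leaf": "discount on running shoes"},
        "no": {"leaf": "running socks"},
    },
    "no": {"leaf": "current bestsellers"},
}


def recommend(tree, customer):
    """Walk the tree and return (recommendation, path of decisions taken)."""
    node, path = tree, []
    while "leaf" not in node:
        answer = bool(customer.get(node["feature"], False))
        path.append(f"{node['feature']} = {answer}")
        node = node["yes"] if answer else node["no"]
    return node["leaf"], path


print(moderate("AMAZING deal https://a.example https://b.example https://c.example"))
print(recommend(TREE, {"viewed_running_shoes": True, "abandoned_cart": False}))
```

Because both the rule names and the tree path are returned with every decision, the explanation is simply the model's own logic, with no separate explainer needed.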

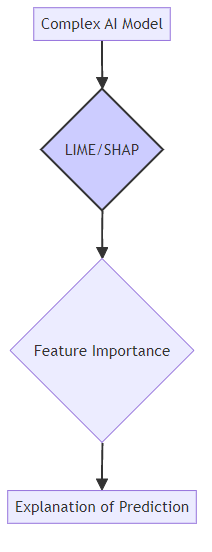

Post-modeling explainability dissects complex AI models after they're built.

- Breaking down complex AI models for easier understanding: These techniques make black boxes more transparent, which is useful for both content strategy and regulatory compliance. It's like getting a post-mortem on the AI's decision.

- Visualization, textual justification, simplification, and feature relevance: These are key techniques for explaining AI decisions.

  - Visualization: Charts and graphs that show why an AI made a certain recommendation. For example, a graph might show which keywords were most influential in generating a blog post title.

  - Textual Justification: The AI provides a written explanation for its output, such as, "I suggested this headline because it includes keywords related to 'sustainability' and 'eco-friendly,' which are trending topics for your audience."

  - Simplification: A complex AI decision is broken down into simpler terms. If an AI recommends a specific content format, simplification would explain why that format is likely to perform well based on audience behavior.

  - Feature Relevance: This technique highlights which input features (such as specific words, user demographics, or past engagement data) had the most impact on the AI's output. For example, it might show that the mention of "free shipping" was the primary driver of a product recommendation.

- Tools such as LIME (Local Interpretable Model-Agnostic Explanations) and SHAP (SHapley Additive exPlanations) help explain individual predictions.

  - LIME explains individual predictions of any machine learning model by approximating the model locally with an interpretable one. So if an AI flags a piece of text as potentially problematic, LIME can tell you which words or phrases contributed most to that decision.

  - SHAP assigns each feature an importance value for a particular prediction, using Shapley values from cooperative game theory. It's a principled way to distribute the "credit" for the AI's output among the input factors. For content creators, this means seeing which elements of the input data most influence the AI's suggestions.
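To make the Shapley idea behind SHAP concrete, the sketch below computes exact Shapley values for a toy headline-scoring function by enumerating every coalition of features, then turns the top attribution into a one-line textual justification. The scoring function and its keywords are hypothetical, and "removing" a feature here simply means the keyword is absent. The `shap` library itself uses faster approximations and its own baseline choices, so treat this as an illustration of the concept, not of that library's API.

```python
from itertools import combinations
from math import factorial

FEATURES = ["sustainability", "eco-friendly", "free shipping"]


def headline_score(present):
    """Hypothetical black-box score for a headline, given the set of keywords it contains."""
    score = 1.0
    if "sustainability" in present:
        score += 2.0
    if "eco-friendly" in present:
        score += 1.5
    if "sustainability" in present and "eco-friendly" in present:
        score += 1.0  # interaction: the two keywords work better together
    if "free shipping" in present:
        score += 0.5
    return score


def shapley_values(model, features):
    """Exact Shapley values by enumerating every coalition of the other features.

    A feature's value is its marginal contribution model(S + {f}) - model(S),
    averaged over all subsets S with weight |S|! (n - |S| - 1)! / n!.
    Exponential in len(features), so only practical for a handful of features.
    """
    n = len(features)
    values = {}
    for f in features:
        others = [g for g in features if g != f]
        total = 0.0
        for size in range(n):
            weight = factorial(size) * factorial(n - size - 1) / factorial(n)
            for subset in combinations(others, size):
                s = set(subset)
                total += weight * (model(s | {f}) - model(s))
        values[f] = total
    return values


def justify(values):
    """Turn the largest attribution into a one-sentence textual justification."""
    top = max(values, key=values.get)
    return f"Suggested mainly because it mentions '{top}' (+{values[top]:.2f} to the score)."


values = shapley_values(headline_score, FEATURES)
print({f: round(v, 2) for f, v in values.items()})
print(justify(values))
```

The interaction bonus is split evenly between the two keywords, so the attributions come out to 2.5, 2.0, and 0.5. They sum to the gap between the full score (6.0) and the empty-headline score (1.0), the same additive property that SHAP explanations are built around.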

Understanding these XAI techniques empowers content creators to use AI responsibly and effectively. Next, we'll look at practical applications of XAI in content creation.

Practical Applications of XAI in Content Creation

XAI is not just theory; it's a toolkit content creators can use today. Let's explore practical ways to use XAI to improve content quality and ethics.

AI-powered tools like Social9 can spark creative content ideas. But how do you ensure the AI's suggestions align with your brand and values?

- Leveraging AI-Powered Generation: Use AI to brainstorm captions, but don't accept the results blindly. Analyze the suggestions to understand the AI's approach, then refine the output. For example, if the AI suggests a caption that's too informal for your brand, XAI can help you see why it chose that tone so you can adjust.

- Using Smart Captions: Look for platforms that offer "smart" caption features. These expose the AI's reasoning behind the suggested text, giving you control over the message, and show which elements of your image or topic the AI focused on.

- Optimizing Hashtag Suggestions: Hashtags boost visibility, but relevance is key. Use XAI to understand why the AI suggests specific hashtags and confirm they align with your content and target audience. It can show whether the AI picked a hashtag for its broad popularity or its niche relevance.
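One way a tool could make hashtag suggestions explainable is to score each candidate with a transparent, additive formula and report which term dominated. The sketch below assumes made-up popularity and topic-overlap statistics and hand-picked weights; it is not how Social9 or any specific platform works.

```python
# Hypothetical candidate hashtags with made-up, normalized (0-1) statistics.
CANDIDATES = {
    "#sustainablefashion": {"popularity": 0.60, "topic_overlap": 0.90},
    "#ootd": {"popularity": 0.95, "topic_overlap": 0.20},
    "#upcycledstyle": {"popularity": 0.30, "topic_overlap": 0.95},
}

# Assumed weights; a real tool would learn or tune these.
POPULARITY_WEIGHT = 0.4
RELEVANCE_WEIGHT = 0.6


def explain_hashtags(candidates):
    """Rank hashtags by an additive score and report which term dominated each one."""
    ranked = []
    for tag, stats in candidates.items():
        popularity = POPULARITY_WEIGHT * stats["popularity"]
        relevance = RELEVANCE_WEIGHT * stats["topic_overlap"]
        driver = "broad popularity" if popularity > relevance else "niche relevance"
        ranked.append((tag, round(popularity + relevance, 3), driver))
    return sorted(ranked, key=lambda item: item[1], reverse=True)


for tag, score, driver in explain_hashtags(CANDIDATES):
    print(f"{tag:<22} score={score:.3f} driven by {driver}")
```

Because the score is a plain sum of two weighted terms, the explanation ("driven by niche relevance") is exact rather than approximated after the fact.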

Ad copy needs to resonate with diverse audiences, but unintentional biases can creep in if you aren't careful.

- Identifying and Mitigating Potential Biases: Use XAI to scrutinize ad copy for biased language or imagery, so your message is inclusive and doesn't perpetuate harmful stereotypes. XAI can highlight phrases that might be unintentionally exclusionary.

- Ensuring Fair and Inclusive Representation: XAI can help you evaluate whether your ad campaigns fairly represent different demographics by analyzing the sentiment and representation in visuals or text. This promotes ethical marketing and avoids alienating potential customers.

- Improving Brand Reputation: Promoting ethical AI practices enhances your brand's image, because customers value transparency and social responsibility.
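A common starting point for checking fair representation is to compare how often an ad-targeting model shows an ad to each audience segment. This sketch computes per-segment selection rates and their ratio on a hypothetical delivery log; the 0.8 cutoff borrows the "four-fifths" rule of thumb from US employment-selection guidance and is only a heuristic, not an advertising standard.

```python
# Hypothetical delivery log: (audience segment, whether the targeting model showed the ad).
DECISIONS = [
    ("18-34", True), ("18-34", True), ("18-34", False), ("18-34", True),
    ("55+", True), ("55+", False), ("55+", False), ("55+", False),
]


def selection_rates(decisions):
    """Share of opportunities in each segment where the ad was actually shown."""
    shown, total = {}, {}
    for group, was_shown in decisions:
        total[group] = total.get(group, 0) + 1
        shown[group] = shown.get(group, 0) + int(was_shown)
    return {group: shown[group] / total[group] for group in total}


def disparate_impact(rates):
    """Lowest selection rate divided by the highest (1.0 means parity)."""
    highest = max(rates.values())
    return min(rates.values()) / highest if highest else 1.0


rates = selection_rates(DECISIONS)
ratio = disparate_impact(rates)
print(rates, f"ratio={ratio:.2f}")
if ratio < 0.8:
    print("Below the four-fifths rule of thumb: review the targeting inputs.")
```

A low ratio tells you that delivery is skewed, not why; feature-relevance techniques like those described earlier can then show which inputs drive the gap.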

Influencer marketing can be a powerful tool, but measuring its true impact can be difficult. XAI helps you understand what's really driving results.

- Analyzing the Factors Driving Campaign Success: Use XAI to dissect which aspects of an influencer's content resonated most with the audience. Was it the message, the visuals, or the influencer's personal brand? XAI can break down engagement metrics by content element.

- Identifying the Most Impactful Influencers and Content Strategies: XAI can reveal which influencers and content types delivered the highest engagement and conversions. This helps you refine your influencer strategy for future campaigns. You can see why one influencer performed better than another.

- Optimizing Future Campaigns: Use XAI insights to make data-driven decisions about influencer selection, content creation, and campaign targeting.
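A simple way to break engagement down by content element is to compare average engagement for posts with and without each element. The sketch below does this on hypothetical campaign data, alongside a per-influencer average. These comparisons are correlational: an element that happens to co-occur with a strong influencer can look effective without causing the lift.

```python
from statistics import mean

# Hypothetical campaign posts: content elements used and engagement rate in percent.
POSTS = [
    {"influencer": "A", "elements": {"video", "discount_code"}, "engagement": 6.0},
    {"influencer": "A", "elements": {"photo"}, "engagement": 2.0},
    {"influencer": "B", "elements": {"video"}, "engagement": 5.0},
    {"influencer": "B", "elements": {"photo", "discount_code"}, "engagement": 4.0},
]


def element_lift(posts):
    """Mean engagement of posts with each element minus mean engagement of posts without it."""
    elements = set().union(*(post["elements"] for post in posts))
    lifts = {}
    for element in sorted(elements):
        with_el = [p["engagement"] for p in posts if element in p["elements"]]
        without_el = [p["engagement"] for p in posts if element not in p["elements"]]
        if with_el and without_el:  # lift is undefined if every post (or none) uses it
            lifts[element] = mean(with_el) - mean(without_el)
    return lifts


def influencer_average(posts):
    """Average engagement rate per influencer."""
    names = sorted({p["influencer"] for p in posts})
    return {n: mean(p["engagement"] for p in posts if p["influencer"] == n) for n in names}


print(element_lift(POSTS))
print(influencer_average(POSTS))
```

With more posts, a regression or a controlled test would be needed to separate the effect of an element from the effect of who posted it.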

By using XAI, content creators can fine-tune their strategies for maximum impact. The next section discusses the challenges of implementing XAI.

Addressing the Challenges of Implementing XAI

One of the main hurdles in using Explainable AI (XAI) is ensuring that everyone understands it, from developers to content creators. Let's explore some of these challenges.

Developers and content creators often see XAI differently. Developers might focus on the technical details, while content creators need to understand how it impacts the content itself.

- Ensuring Acceptable Explanations: XAI explanations need to be trustworthy for both groups. For developers, this might mean detailed metrics; for content creators, it could mean clear reasons why an AI suggested a particular phrase. An explanation that isn't clear or useful isn't really helping.

- Bridging the Knowledge Gap: Developers have technical AI knowledge, while content creators are experts in audience engagement. Bridging this gap is essential for effective collaboration; XAI works only when both perspectives are involved.

- Focusing on Plausibility: Content creators prioritize real-world applicability and holistic information. The AI's suggestions need to align with real-world scenarios and account for the many factors involved; in short, the output has to make sense in the actual context of content creation and the target audience's reality.

How do we know if XAI is actually working? Measuring its effectiveness is a challenge.

- Developing Standardized Approaches: We need standard ways to measure XAI effectiveness, which could include metrics for transparency, accuracy, and user trust. The open question is how to quantify "explainability" itself.

- Increasing Workflow Integration: XAI should be a seamless part of content creation, not a separate, confusing step. It needs to fit naturally into how you already work.

- Improving Communication: Content creators, researchers, and AI developers need to communicate effectively so that everyone is on the same page about XAI's goals and limitations.

AI can sometimes rely on irrelevant information.

- Understanding Unsupervised Learning: Unsupervised learning models can pick up on irrelevant features, so an AI might suggest a topic based on a minor detail rather than genuine relevance. It's like the AI getting distracted by shiny objects.

- Mitigating Clever Hans Effects: A "Clever Hans" effect occurs when an AI appears to perform a task intelligently but is actually responding to an unintended cue in the data. Explainable AI can help detect and mitigate these effects, ensuring the AI relies on meaningful data.

- Ensuring Model Robustness: Models need to be reliable across different data subgroups. This prevents bias and keeps content fair and accurate; an AI that works well for only one type of audience isn't very useful.
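Checking robustness across subgroups can start with something as plain as accuracy per audience segment. The sketch below uses a hypothetical evaluation set and an assumed 10-point tolerance. A large gap does not prove a Clever Hans effect, but it is a cue to look at what the model relies on for the weaker segment.

```python
# Hypothetical evaluation set: (audience segment, model prediction, true label),
# e.g. whether the model correctly predicted that a post would be engaged with.
RECORDS = [
    ("new_followers", 1, 1), ("new_followers", 0, 0),
    ("new_followers", 1, 1), ("new_followers", 1, 0),
    ("long_time_followers", 1, 0), ("long_time_followers", 0, 1),
    ("long_time_followers", 1, 1), ("long_time_followers", 0, 1),
]


def accuracy_by_group(records):
    """Fraction of correct predictions within each segment."""
    correct, total = {}, {}
    for group, prediction, label in records:
        total[group] = total.get(group, 0) + 1
        correct[group] = correct.get(group, 0) + int(prediction == label)
    return {group: correct[group] / total[group] for group in total}


def flag_gaps(accuracy, max_gap=0.1):
    """Segments whose accuracy trails the best segment by more than max_gap."""
    best = max(accuracy.values())
    return sorted(g for g, acc in accuracy.items() if best - acc > max_gap)


accuracy = accuracy_by_group(RECORDS)
print(accuracy)
print("Needs review:", flag_gaps(accuracy))
```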

A recent study highlights the importance of collaboration among clinicians, developers, and researchers in designing explainable AI systems that are effective, usable, and reliable in healthcare. The same principle applies across industries, including content creation, where you need input from both the technical side and the creative side.

Addressing these challenges will help content creators harness the full potential of XAI.

Best Practices for Implementing XAI in Your Content Strategy

Implementing Explainable AI (XAI) in your content strategy doesn't have to be overwhelming. By adopting a few best practices, you can ensure your AI-driven content is transparent, ethical, and effective.

Begin your XAI journey with AI models that are easy to understand. Rather than jumping straight into complex neural networks, consider starting with decision trees or rule-based systems.

- These models offer inherent transparency, making it easier to trace how the AI arrives at its conclusions. For instance, a basic rule-based system could choose keywords for a blog post from predefined rules, so content creators can easily see why each suggestion was made.

- As you gain experience and confidence, gradually incorporate more complex models. You can use simpler models to explain the output of more complex ones, creating a layered approach to AI implementation.
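A minimal sketch of using a simple model to explain a complex one, under stated assumptions: the "black box" below is a hypothetical scoring function we only query, and the surrogate is a one-feature threshold rule (a decision stump) chosen to agree with the black box as often as possible. The share of agreement, usually called fidelity, indicates how far the simple explanation can be trusted.

```python
from itertools import product


def black_box(word_count, hour):
    """Stand-in for an opaque model (hypothetical): we can query it but not read it."""
    score = 0.015 * word_count + (0.8 if 17 <= hour <= 20 else 0.0)
    return score > 1.6


def fit_stump(samples, labels, feature_index):
    """Find the threshold t for which 'feature >= t' best reproduces the black box.

    Returns (threshold, fidelity), where fidelity is the share of samples on
    which the surrogate and the black box agree.
    """
    best_t, best_fidelity = None, -1.0
    for t in sorted({s[feature_index] for s in samples}):
        agree = sum((s[feature_index] >= t) == label for s, label in zip(samples, labels))
        fidelity = agree / len(samples)
        if fidelity > best_fidelity:
            best_t, best_fidelity = t, fidelity
    return best_t, best_fidelity


# Query the black box on a grid of (word_count, hour) inputs.
samples = list(product(range(20, 201, 20), range(0, 24, 3)))
labels = [black_box(w, h) for w, h in samples]

threshold, fidelity = fit_stump(samples, labels, feature_index=0)
disagreements = [s for s, label in zip(samples, labels) if (s[0] >= threshold) != label]
print(f"Surrogate rule: word_count >= {threshold} (fidelity {fidelity:.1%})")
print("Black box disagrees on:", disagreements)
```

Here the best rule is `word_count >= 120`, which matches the black box on 77 of 80 queries. The three disagreements are all evening posts, a hint that the black box also uses posting hour, which a one-feature surrogate cannot capture.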

XAI is most valuable when it provides practical guidance for content improvement. Ensure that the explanations you receive from XAI tools translate into concrete steps for enhancing your content strategy.

- Use XAI to pinpoint specific areas for optimization, such as identifying why certain headlines perform better than others or determining which content formats resonate most with your audience. Connect these insights to measurable outcomes like engagement rates, conversion rates, and follower growth.

- For example, if XAI reveals that content with a specific tone performs better on LinkedIn, adjust your content calendar to prioritize similar posts on that platform. This ensures that XAI insights directly inform your content creation process.
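Connecting an insight to a measurable outcome can be as simple as computing engagement rate per platform and tone. The post metrics below are hypothetical, and "tone" is assumed to be a label you or an XAI tool have already attached to each post.

```python
from collections import defaultdict

# Hypothetical post metrics: (platform, tone, engagements, impressions).
POSTS = [
    ("LinkedIn", "formal", 120, 4000),
    ("LinkedIn", "playful", 45, 3000),
    ("LinkedIn", "formal", 90, 2000),
    ("Instagram", "playful", 300, 5000),
    ("Instagram", "formal", 80, 4000),
]


def engagement_rates(posts):
    """Pooled engagement rate (total engagements / total impressions) per (platform, tone)."""
    totals = defaultdict(lambda: [0, 0])
    for platform, tone, engagements, impressions in posts:
        totals[(platform, tone)][0] += engagements
        totals[(platform, tone)][1] += impressions
    return {key: e / i for key, (e, i) in totals.items()}


def best_tone(rates, platform):
    """Tone with the highest engagement rate on the given platform."""
    options = {tone: rate for (p, tone), rate in rates.items() if p == platform}
    return max(options, key=options.get)


rates = engagement_rates(POSTS)
for platform in ("LinkedIn", "Instagram"):
    print(platform, "->", best_tone(rates, platform))
```

Rates are pooled rather than averaged per post, so a post with few impressions does not weigh as much as a widely seen one.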

Effective XAI implementation requires close collaboration with AI specialists. Content creators and AI developers should work together to ensure that AI models align with content goals.

- Provide feedback to AI developers on the relevance and usefulness of the explanations generated by XAI tools. This helps developers refine the models to provide more practical and actionable insights.

- Building a multidisciplinary team fosters a shared understanding of both the technical aspects of AI and the strategic goals of content creation. For example, content creators can help developers understand audience preferences, while developers can provide insights into the AI's decision-making process.

By following these best practices, you can effectively integrate XAI into your content strategy, keeping your AI-driven content transparent, ethical, and aligned with your business goals.

The next section looks at where XAI and content creation are headed.

The Future of XAI and Content Creation

Content creation is on the cusp of a major shift, driven by rapid advances in AI. As XAI techniques evolve, they promise to deliver more nuanced and context-aware explanations.

Future AI advancements are expected to bring concept-based explanations, allowing creators to understand the ideas behind AI suggestions. Instead of just seeing keywords, you'll understand the abstract concepts the AI is working with.

Causal explanations will let AI not only identify correlations but also explain cause-and-effect relationships in content performance. The AI could then tell you, "This specific change in your call-to-action caused a 15% increase in conversions," rather than only that the two are related.
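Today, the standard way to back a causal claim like that is a randomized A/B test rather than the model's own explanation. The sketch below runs a classic two-proportion z-test on hypothetical numbers, chosen so that the new call-to-action converts at 4.6% versus 4.0%, a 15% relative lift.

```python
from math import erf, sqrt


def normal_cdf(x):
    """Standard normal CDF via the error function."""
    return 0.5 * (1 + erf(x / sqrt(2)))


def ab_test(conversions_a, visitors_a, conversions_b, visitors_b):
    """Two-proportion z-test comparing variant B's conversion rate to control A's.

    Returns (relative lift of B over A, z statistic, two-sided p-value).
    """
    rate_a = conversions_a / visitors_a
    rate_b = conversions_b / visitors_b
    pooled = (conversions_a + conversions_b) / (visitors_a + visitors_b)
    std_err = sqrt(pooled * (1 - pooled) * (1 / visitors_a + 1 / visitors_b))
    z = (rate_b - rate_a) / std_err
    p_value = 2 * (1 - normal_cdf(abs(z)))
    return (rate_b - rate_a) / rate_a, z, p_value


# Hypothetical experiment: visitors randomly assigned to the old or new call-to-action.
lift, z, p = ab_test(400, 10_000, 460, 10_000)
print(f"lift={lift:.1%}  z={z:.2f}  p={p:.3f}")
```

The causal reading comes from randomly assigning visitors to each variant; the z-test only tells you whether the observed difference is larger than chance would plausibly produce.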

Actionable XAI will provide direct, implementable insights, guiding creators on what specific changes to make for better results. It's like having a coach that tells you exactly what to do next.

Falsifiability will allow creators to test and validate AI explanations, ensuring reliability and trust. You'll be able to challenge the AI's reasoning and see whether it holds up under scrutiny.
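One simple way to put an explanation to the test is a deletion check: if an explanation claims feature A matters more than feature B, removing A should change the output more than removing B. The sketch below applies this to a hypothetical call-to-action scorer. Removing one feature at a time is only one of several possible tests, and it can disagree with other attribution methods when features interact.

```python
def cta_score(words):
    """Hypothetical model scoring a call-to-action from the set of words it contains."""
    score = 0.1
    if "free" in words:
        score += 0.3
    if "now" in words:
        score += 0.1
    if "today" in words:
        score += 0.05
    return score


def deletion_test(model, present, claimed_ranking):
    """Check a claimed importance ranking against observed score drops.

    Each feature is removed on its own; if the explanation is right, features
    ranked as more important should cause larger drops.
    """
    full = model(present)
    drops = {f: full - model(present - {f}) for f in claimed_ranking}
    observed = sorted(drops, key=drops.get, reverse=True)
    return observed == list(claimed_ranking), drops


words = {"free", "now", "today"}
print(deletion_test(cta_score, words, ["free", "now", "today"]))  # explanation survives
print(deletion_test(cta_score, words, ["now", "free", "today"]))  # explanation refuted
```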

Explainable AI analyzes results after they are computed, while Responsible AI focuses on planning ahead so that algorithms are ethical before any results are produced. In other words, it is proactive rather than reactive.

Fairness and debiasing will be critical, ensuring AI doesn't perpetuate harmful stereotypes in content. This is a major concern for ethical content creation.

Lifecycle automation will streamline AI processes, making responsible AI an integral part of content creation workflows: built in, not an afterthought.

Continuous model evaluation will be essential, monitoring AI performance to identify and correct biases. You can't just set it and forget it.
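Continuous evaluation can begin with a scheduled job that recomputes accuracy on fresh labeled data and flags drops against a launch baseline. The weekly results, baseline, and tolerance below are hypothetical; in practice you would also track per-segment gaps and fairness ratios over time.

```python
# Hypothetical weekly evaluation results: week -> list of (prediction, true label).
WEEKLY = {
    "2024-W01": [(1, 1), (0, 0), (1, 1), (0, 0)],
    "2024-W02": [(1, 1), (0, 0), (1, 0), (0, 0)],
    "2024-W03": [(1, 0), (0, 1), (1, 1), (0, 0)],
}


def weekly_accuracy(weekly):
    """Fraction of correct predictions for each week."""
    return {week: sum(p == y for p, y in pairs) / len(pairs) for week, pairs in weekly.items()}


def weeks_needing_review(accuracy, baseline, tolerance=0.1):
    """Weeks whose accuracy fell more than `tolerance` below the launch baseline."""
    return [week for week, acc in sorted(accuracy.items()) if acc < baseline - tolerance]


accuracy = weekly_accuracy(WEEKLY)
print(accuracy)
print("Review:", weeks_needing_review(accuracy, baseline=0.9))
```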

By optimizing business outcomes, XAI will directly contribute to ROI and strategic decision-making. It's not just about making pretty content; it's about making content that works.

Fine-tuning model development based on continuous evaluation will keep AI accurate, relevant, and ethical.

With ongoing advancements and responsible implementation, XAI can significantly enhance content creation.

Recap: The XAI Journey in Content Creation

We've journeyed through the evolving landscape of AI in content creation, starting with its impact and the challenges it presents, particularly the "black box" problem. We then looked at what Explainable AI (XAI) is, clarifying the distinctions between explainability, interpretability, and transparency, and how these concepts build trust, offer control, and drive improvement.

We explored various XAI techniques, from pre-modeling data preparation to inherently interpretable models and post-modeling analysis tools like LIME and SHAP. These techniques help creators understand why an AI makes certain suggestions.

The practical applications of XAI were highlighted, showing how it can refine content generation, ensure fair representation in ad copy, and provide deeper insights into influencer marketing campaigns. We also touched upon the challenges in implementing XAI, such as bridging knowledge gaps and ensuring the explanations are practical and relevant.

Finally, we looked ahead to the future of XAI, anticipating advances like concept-based explanations, causality, and actionable insights that will further integrate ethical and effective AI into content strategies.

By embracing XAI, content creators can move beyond simply using AI tools to truly understanding and leveraging them, leading to more transparent, ethical, and impactful content.